In 2006, Clive Humby famously said that data is the new oil. The 15 years since have shown that Humby was right about the dominant role of data in modern business. The latest business giants are built not on fossil raw materials but on clever ways to refine and use vast amounts of data.

In many ways, comparing data with oil is a fitting metaphor. Data has value only after it is processed and refined. However, there is a key difference. Unlike oil, data is not limited: it is renewable and reusable, and the amount of data will only continue to grow. Data is seen as an asset for streamlining existing business and processes, developing new products and data models, and creating new business. The importance of data to business has grown significantly, and the growth is only accelerating.

Data-driven organizations need a refinery for their data streams

Contrary to what was feared with oil in the '70s, the stumbling block with data is rarely scarcity. The challenges usually lie in collecting and processing data and in distributing it for business use. In this blog post, I focus on the technological backbone required to get value out of data. This backbone is a Data Platform.

To continue with the metaphor, it is useful to think of data as a continuous, evolving stream of information rather than taking the traditional static view of data as a file on a system. Data can flow directly from one business application to another, or it can be routed through a unified refinery along the way. A refinery, or in the case of data, a Data Platform, is a collection of tools and processes that refine the data into a usable format. In the Data Platform, the necessary business logic is applied to the data and it is combined with related information. The data then flows onward to its final use cases.

Larger organizations have found that in the complex reality of enterprise systems, directing most data flows to the same platform for processing and storage is crucial. There are simply too many systems and applications to connect directly. If data flows back and forth point-to-point between all the relevant systems, the number of connections, and with it the complexity, grows quadratically with the number of systems.
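A quick back-of-the-envelope comparison shows why a central platform pays off as the number of systems grows. The sketch below assumes the worst case, where every system needs a direct integration with every other system (each pair counted once), versus a hub model where each system connects only to the Data Platform. The function names are hypothetical and chosen purely for illustration.

```python
def point_to_point_links(n: int) -> int:
    """Direct integrations if every system connects to every other system (each pair once)."""
    return n * (n - 1) // 2


def hub_links(n: int) -> int:
    """Integrations if every system connects only to a central data platform."""
    return n


# Compare the two approaches for a few organization sizes (worst-case assumption).
for n in (5, 10, 20, 50):
    print(f"{n:>3} systems: {point_to_point_links(n):>5} point-to-point links "
          f"vs {hub_links(n):>3} via a platform")
```

With 10 systems this gives 45 point-to-point links versus 10 via a platform; with 50 systems the gap widens to 1,225 versus 50. Real landscapes rarely need every pair connected, but the trend is the same.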

Once you have decided that it is time to unleash the data from the separate business systems and start the journey towards a modern Data Platform, what are the key things to consider? In almost all cases, a modern Data Platform is built on top of the public cloud platforms from Microsoft, Amazon, or Google. The specific components and solutions inside the Data Platform vary, but there are a couple of key principles they share.

Don’t build your data platform like you would build an industrial investment

Unlike physical refineries, data refineries should not be thought of as a giant investment to be built all at once. Modern cloud platforms and software enable a much more agile and flexible way of development. It is better to break the construction of the Data Platform down into smaller projects, each of which provides real and usable value.

We start with a small but valuable use case so that we can see tangible results at an early stage and make the data visible to the business. After that, we expand the solution one piece at a time to scale it up. These use-case-driven projects start with sourcing the data and end with practical, usable knowledge. Arranging the work like this makes the projects much easier to understand and transparent to everyone.

It is of course good to think about the overall architecture from the very beginning, so that it also works efficiently in a larger and more complex environment. However, a surprisingly large part of this work can be carried out in parallel with the delivery of the first use cases. The selected technologies and working methods must be chosen so that new work can be built smoothly on top of and alongside the old without conflicts.

Make sure that the Data Platform is built with sound development methodologies

A successful Data Platform project requires a clear understanding of goals and needs, the right know-how, and the right technology. In addition, efficient development methodologies are needed.

Agile development methodologies are the industry standard. However, there is more to the story. To really gain the benefits of modern ways of working, the toolkit should include all the key elements, from DevOps to automated testing, publishing, and documentation. These not only enhance and accelerate development but also make the Data Platform easy to maintain and manage. A well-functioning development methodology also brings business experts into the data work, as they know the business needs best and are also the most likely to find new ways to use data for the benefit of the business.

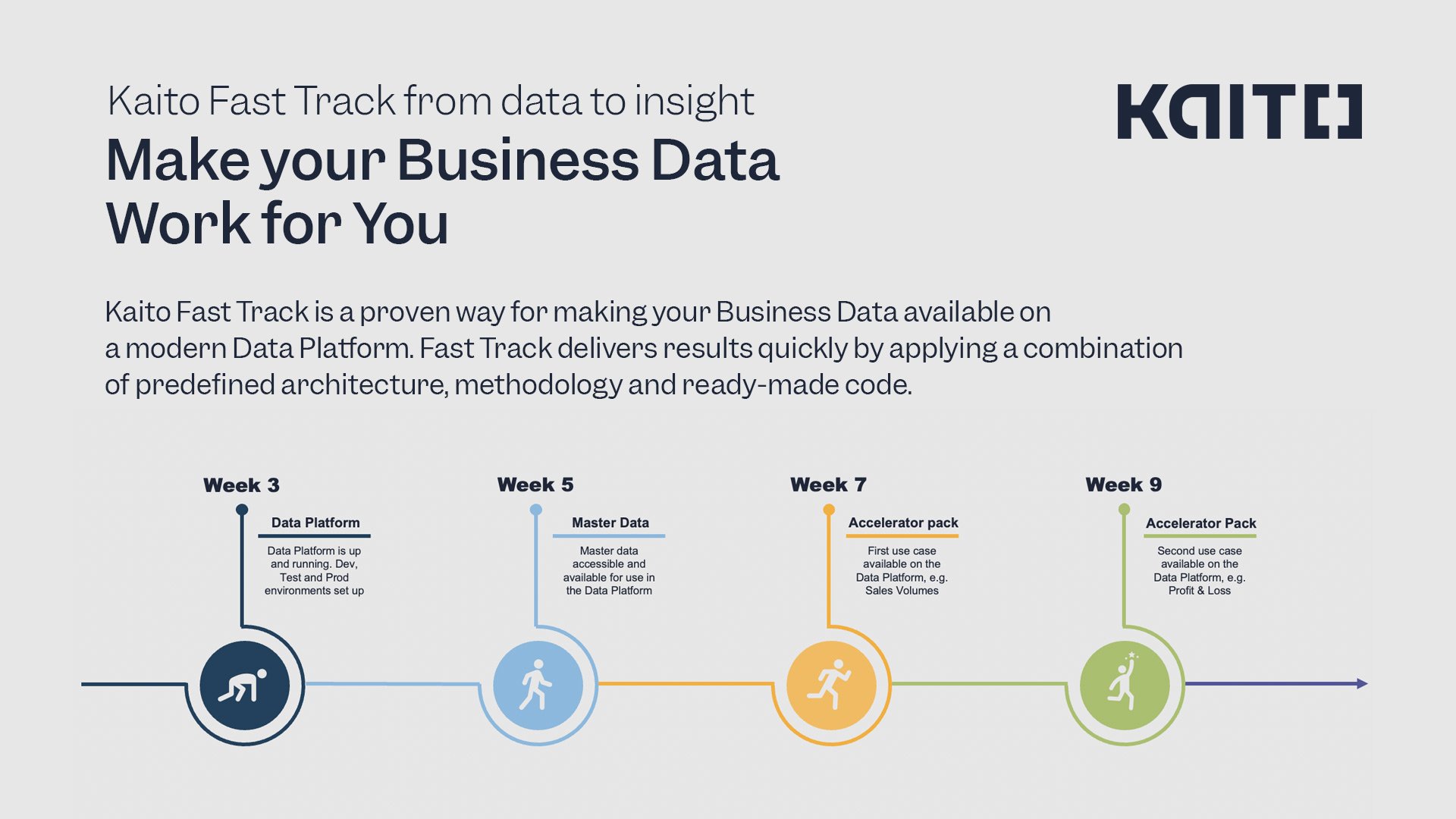

Our own productized approach to building modern Data Platforms

Modern cloud-based services make it quick and cost-efficient to kick-start a cloud Data Platform initiative. At Kaito, we have productized our approach to building a Data Platform. The Kaito Fast Track to Insight productized service consists of a predefined Data Platform architecture, a ready-made codebase, templates, and an agile delivery methodology. This allows quick delivery of concrete results and provides a framework for delivering continuous value to the organization. Our Fast Track reference architecture is fully scalable, and it is easy to expand into new use cases piece by piece. I’m happy to show more and hear your thoughts. You can find out more about our Fast Track here.

Johan Wikström

VP of Business Analytics @ Kaito Insight